AI Ethics Twitter Book Chat Safiya Noble Algorithms of Oppression

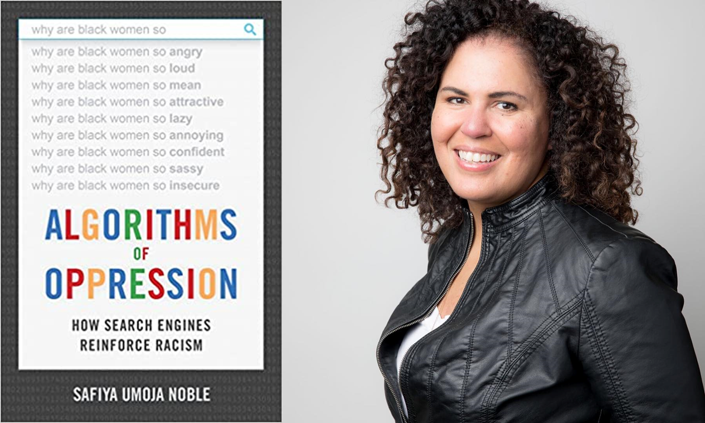

In our June AI Ethics Book Chat, we invited author and scholar Dr. Safiya Noble @SafiyaNoble to discuss their powerful book “Algorithms of Oppression.”

Mia Shah-Dand: Please tell us more about your first encounter with racism in search that inspired your powerful book?

Safiya Noble: to do research in this area. I spent a lot of time thinking about this in the context of CritLib while in a doctoral program @iSchoolUI and I was studying and learning from @lnakamur @AngharadValdivi and so many great scholars at Illinois. I had been critically looking at Google search because @sivavaid wrote the book that inspired me the most: https://ucpress.edu/book/9780520272897/the-googlization-of-everything and I was also influenced by the writings of @DocDre @halavais & wanted to put this digital media studies and info-sci in dialogue with Black feminists who had influenced me my whole life: bell hooks, Black Feminist Thought, and Angela Y. Davis.

I was finding lots of racist & sexist results in search. During that time, @DocDre said to me, “you should see what happens when you search for Black girls.” I did see. I saw again & again, and that became the leading example for my book. I was thinking about the beautiful Black children in my life & how my girls were growing up in a world where their identities were subject to porn being the primary representation of “Black girls” in commercial search.

MSD: What is the role of (search) advertising and advertisers in shaping the popular narrative about culture and society?

SN: Commercial search engines play a huge role in our everyday lives. We use them for complex & banal kinds of queries, and search shapes our thinking & actions. Most people think of search as neutral/credible & not as an advertising engine. I tried to parse how search works so that we could resist thinking of the major advertising/media firms as “public libraries” — it’s not accurate. @ubiquity75 wrote a great piece on that https://illusionofvolition.com/2018/03/20/no-youtube-is-not-a-library-and-why-it-matters/

As I was writing the book, I was giving talks to K-12 teachers, librarians, and on college campuses about search bias & what’s at stake. Meanwhile, “Just Google It” became the prevailing mantra. In reality, we need more research on search. Ultimately, those who pay to optimize their content & those with strong technical skills are able to make themselves *more* visible than others, while the public thinks a magical algorithm is giving them “the best” results. People like @JessieNYC showed us that racism/racists have more power online. In the context of Silicon Valley writ large, many tech companies are printing money off the backs of vulnerable people. I study these problems so we can address them.

MSD: Is bias in library discovery systems and cataloging systems the result of unintentional misclassification or deliberate racism?

SN: There is little doubt among most CritLib scholars that libraries are implicated in structural racism because they have played a major role in both allowing and prohibiting access to knowledge. They’ve upheld & perpetuated racist hegemonies. There is no way to talk about structural racism without including the racist practices of classification of people, species & ideas. The oppressive consequences of classification are the histories of science/knowledge organization. My work addresses knowledge/info workers: from librarians to software engineers. We need to know the violent, racist & sexist power of classification schemes, because most digital technologies & AI are predicated upon these very same logics.

MSD: You’ve said that lack of diversity at companies like Yelp, Facebook is reflected in their search engines, which don’t understand language of the Black community. What are some examples of this?

SN: The lack of diversity in Silicon Valley (and beyond) is certainly an issue, from not understanding the many cultures of Black people, to making products that are designed to capitalize upon us and/or harm us. How you frame the problems of racism and sexism in the tech sector also determines the kind of interventions you can imagine. I like this piece by @WillOremus https://onezero.medium.com/five-ideas-to-make-silicon-valley-less-racist-7a1069ad05f8 that just came out as a way into this conversation: Of course we should address Silicon Valley’s abysmal record on diversity. We also need regulations against making racist/sexist/oppressive tech that undermines democracy and foments harm against the public. The sector has too little accountability.

MSD: How do we address Silicon Valley culture that continues to frame lack of representation as a “pipeline” problem instead of as an issue of racism and sexism?

SN: This question reminds me of how I love the reporting from @jguynn because she’s a great disrupter of the tired narrative that there are no people of color in the pipeline for tech jobs. https://usatoday.com/story/tech/news/2016/07/15/facebook-diversity-pipeline-silicon-valley/87142058/ and because we are on Twitter and I like to be cheeky here, I will say that blaming all the problems on the Black people (it’s your fault we are racist and make racist tech because you didn’t major in computer science), is nonsense. And although I go into this in my book, I will point those who didn’t get a chance to read it to this excerpt. I think it helps answer this question too. Now, we need to start talking about restoration/reparation. https://wired.com/story/social-inequality-will-not-be-solved-by-an-app/

MSD: “If Google engineers are not responsible for design of their algorithms then who is?” What does true accountability in tech look like?

SN: I think the tech sector is on notice because of the important work of so many people — more than I can name here: @hypervisible @mutalenkonde @ubiquity75 @ruha9 @merbroussard @FrankPasquale @sivavaid @juliapowles @biancawylie @daniellecitron

Big Tech is responsible for their algorithms/AI and they need to be accountable. We need more @RepAOC’s and @mutalenkonde’s in the policy space. There are many who keep unearthing and exposing the lack of and we need support too. True accountability is going to come from many vantage points: tech workers in the global supply chain, activists, voters, legislative bodies, journalists, artists & makers, researchers, and companies, all working to intervene & reimagine.

We need to center those oppressed by the visions of the future that Silicon Valley exports. We need to redirect those resources into social equity. This includes #DefundThePolice & surveillance state tech, and refunding public goods.

Q7 via @Altrishaw @safiyanoble

MSD: Should the control tech companies have over search curation, data, and algorithms to curate search content outcomes be transparent? How do you see this happening; how to engender fairness and accountability?

SN: They are fairly transparent to advertisers about how it works. And, there is a Fairness in AI movement, which calls for more transparency. We should add to that frameworks of justice to undergird regulation & recall of some tech too. I am also thrilled to be in dialogue with @feminist_ai @idealblakfemale and WCCW who are running a virtual book club at https://womenscenterforcreativework.com/events/algorithms-of-oppression/ to gather folks to have a lot of important conversations about the role of tech in our lives.

Q8 from @_Vcopeland our very first eBook recipient. Timely question given recent events.

MSD: How would you address statements that ascribe bias to the dataset, such that fixing the dataset that underpins the algorithm will fix the bias?

SN: I’ve been watching what Dr. @timnitGebru has been going through in trying to help our colleagues in ML understand you cannot simply “unbias the data” and everything becomes “fair.” Data is derived from many intersecting power relations. I would look at the work of @schock and @katecrawford too regarding data & metadata, and how ML algorithms are trained. Many of us are writing about tech and data as a social construction. “Data” is an abstraction that flattens power relations.

Thank you so much for this insightful discussion. Join us again for our July Twitter chat with brilliant data scientist Ayodele Odubela @DataSciBae for a timely conversation on “Increasing Equity in AI” for marginalized and underrepresented groups.
