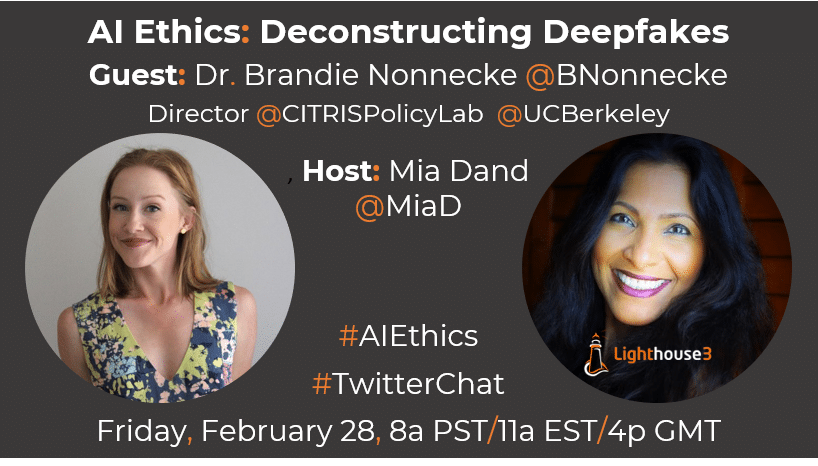

For our February AI Ethics Twitter Chat, we invited expert guest Dr. Brandie Nonnecke, Founding Director of the CITRIS Policy Lab at UC Berkeley, to discuss “Deconstructing Deepfakes.”

Mia Dand: Dr. Nonnecke, welcome! Let’s start with the basics: what are deepfakes?

Dr. Brandie Nonnecke: “Deepfakes” are deceptive audio or visual media created with AI to depict real people saying or doing things they did not say or do. The term “deepfake” is a portmanteau of “deep learning” (a type of machine learning) & “fake”. Don’t confuse deepfakes w/ “cheap fakes” or “shallow fakes”, which are created w/out AI. The video of @SpeakerPelosi that was slowed down to make her appear drunk is a good example of a cheap fake. While cheap/shallow fakes are less complicated to make, they can be more detrimental than deepfakes.

MD: Please explain how deepfakes are created.

BN: Deepfakes are created using sophisticated machine learning techniques called generative adversarial networks (GANs). They draw upon a huge amount of training data and “learn” how to generate content.

MD: What was the original intent behind the creation of deepfakes? Was it malicious or is it an example of good tech gone bad?

BN: Creating realistic synthesized imagery has a decades-long history in computer science. In late 2017, a Reddit user named “deepfakes” posted realistic videos that swapped celebrity faces into pornographic videos. The field exploded & today most deepfakes are pornographic.

MD: In your op-ed in Wired, you say that California’s “Anti-Deepfake Law” is too feeble. Can you please break down the key issues you see with it?

BN: Absolutely! Deepfakes pose a serious threat to election integrity. Lawmakers have pushed forward legislation to mitigate harmful effects of this technology in the #2020election. Both California & Texas have passed laws & federal legislation has been proposed. California’s “Anti-Deepfake Law” makes it illegal to spread a malicious deepfake within 60 days of an election. However, 4 significant flaws will likely make it ineffective: timing, misplaced responsibility, burden of proof, & inadequate remedies.

Timing: The law only applies to malicious deepfakes spread within 60 days of an election & protects satire & parody. However, it’s unclear how to efficiently & effectively determine these criteria. Lengthy reviews will enable content to spread like wildfire.

Misplaced Responsibility: The law exempts platforms from having to monitor & stem the spread of deepfakes. This is due to Section 230 of the Communications Decency Act, which provides platforms w/ a liability safeguard against being sued for harmful user-generated content.

Burden of Proof: The law only applies to deepfakes posted with “actual malice.” Convincing evidence, which is often difficult to obtain, will be necessary to determine intent. For flagged content, a lengthy review process will likely mean the content continues to spread in the meantime.

Inadequate Remedies: Malicious deepfakes will be able to spread widely before detection & removal. There is no mechanism established to ensure those who were exposed also receive a notification of the intent & accuracy of the content. Harm from exposure will go unchecked.

MD: What would you like to see in future laws? And what are some other remedies outside of law?

BN: I’d like to see laws put more of the onus to police content on platforms & provide greater clarity on how to efficiently & effectively determine intent. Outside of law, there are some promising technical & governance strategies emerging. Platforms are taking a more proactive role in supporting the development of technical strategies to identify & stop the spread of harmful deepfakes. The Deepfake Detection Challenge led by @aws @facebook @Microsoft @PartnershipAI is one example: https://deepfakedetectionchallenge.ai/ Greater accountability is needed from platforms for their role in building systems that spread malicious deepfakes. We’ve seen some promising moves from @Facebook & @Twitter in recent weeks to ban malicious deepfakes & label suspicious content, which makes me hopeful.

MD: Lastly, how can folks avoid being duped by deepfakes, given that their usage will likely spike during upcoming elections?

BN: Most deepfakes are not sophisticated enough to trick the viewer. Glitchy movements or an off cadence are tell-tale signs that something’s amiss. Carefully review the content for inconsistencies or flaws & search for whether a trusted entity has evaluated the veracity of the content. Everyone has a critical role to play to mitigate the spread of malicious deepfakes. Take caution in spreading content that you don’t know is verified. All together now... When in doubt, don’t share it out! IMO, the *threat* of a deepfake will cause more harm in the #2020election than a deepfake itself. Nefarious actors will cry “deepfake!” on content they don’t like to sow confusion. This is what Danielle Citron & Bobby Chesney call the Liar’s Dividend: https://papers.ssrn.com/sol3/papers.cfm?abstract_id=3213954 When there’s no definitive truth, everything’s a lie.

MD: (From Blakeley Payne) Do papers on the subject commonly address preventing possible abuse of better systems? Or does it not matter because there are large gaps between software written by academics vs proprietary software written for, say, a film?

BN: Great question! Academics are making great strides in developing ML tools to detect deepfakes. There is a risk of a deepfake arms race in which detection technology fails to keep pace with the development of more convincing deepfakes.

Join us again next month on Friday, Mar 27 at 8 a.m. PT for some good ol’ fashioned “AI Myth-Busting” with our expert guest, Dr. Dagmar Monett, who will help us separate fact from fiction/hype in AI, starting with what AI is and isn’t.