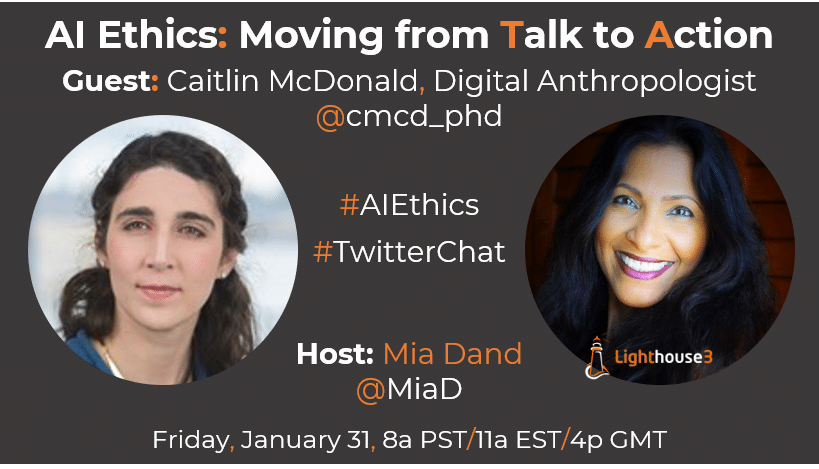

In our monthly AI Ethics Twitter Chat for January, we invited Dr. Caitlin McDonald, an award-winning scholar and Digital Anthropologist at Leading Edge Forum, to discuss how organizations can take AI Ethics from Talk to Action. Here are the key highlights from our very insightful chat.

Mia Dand: Let’s start with the basics: why does ethics in AI matter? What are some risks/harms associated with unethical AI?

Dr. Caitlin McDonald: Ethics in AI is such an important issue—whether you’re a data scientist or not, your work, your private life & consumer life, & your participation in civic society are all going to be impacted by ever-increasing amounts of automated data processing.

You may not see it directly, but it’s woven into the fabric of how you interact with all these institutions. This means that data scientists, business leaders, & everyone working to build AI systems have an enormous responsibility to make sure they’re building AI that works for everyone.

People in positions of power need to advocate for those who do not have the technical knowledge & power to assess fairness in automated systems.

This is one way of building accountability, as is designing systems to be more transparent & explainable so that fairness can actually be assessed. Most important of all is building in accountability mechanisms & governance so that people have the power & agency to redress wrongs.

From a business perspective, I think there are four key harms leaders need to consider as they rely more & more on AI:

- Customers will abandon you for more ethical service providers.

- Talent will leave you for more ethical employers.

- You will do great public harm through unintentionally exacerbating systemic inequalities.

- You will lose the competitive edge of technical prowess to companies or countries with ethics that endanger your employees, your customers or the public at large.

MD: When putting ethics into action, the first question that comes up is who should govern ethics of AI in an organization?

CM: This is such an important question right now because there’s really no consensus yet in businesses. Does it belong in Ethics & Compliance? Those teams are typically great on legal matters but not yet up to speed with technological concerns. If your org has a scientific research unit (for example, in medicine & pharmaceuticals), does it belong with the ethical processes for human testing? What about at the earliest stages of technology development? Does the design team get a say in all of this?

I often find the conversation about governance is split between those who believe in taking an open-ended approach grounded in the tools & techniques of design thinking (e.g., Who are the stakeholders? What are the possible outcomes of this proposed change?) & those who believe in a structured, rule-based approach. But the reality is that both of these approaches have their merits at particular times, & you can observe them in action by looking to historical examples from other industries.

Heavily regulated industries like insurance, health, banking, utilities, etc. all have very well-established frameworks, usually enshrined in law or sometimes in industry best practice, for assessing risk & setting out the right or expected way of doing things.

That’s not to say those industries never experience problems; they absolutely do! But they usually have mechanisms for outlining expected standards, assessing where those haven’t been met, & addressing what went wrong.

But in the early days of those industries, none of those regulatory mechanisms existed, because it wasn’t possible to know for certain what the risks & benefits were. That is much like the situation with the new automated solutions developing so rapidly today.

They’re full of promise & full of risks, & we just don’t know yet what the medium- & long-term impacts will be.

So the best tools available to us in this world of unknowns are all about making reasonable conjectures about the possible harms & benefits—especially where those will disproportionately impact different groups—and designing around those.

As automated decision systems become ever more pervasive, their risks & rewards will become clearer, & we can build more established legal & industry standards & accountability structures around them.

MD: Where should organizations start with ethical AI & how should they prioritize?

CM: This will be a unique journey for every organization; it really depends on the starting point. If you’re a huge global corporation, your biggest worry might be managing different legislation across national & regional boundaries.

If you’re a small startup, you might be more focused on developing techniques for identifying bias in your machine learning models.

At the end of the day most ethical issues aren’t born of malice but carelessness: lack of oversight, lack of diversity of thought in design, lack of accountability mechanisms, lack of deliberation time for uncovering & addressing risks.

If my angry boss is looming over my shoulder, I’m probably going to pay more attention to him than to the risks that might affect people I’ll never meet.

More ethical errors are born from rushing through things & from poorly thought-out incentives than from a deliberate desire to do harm.

That isn’t to say we shouldn’t be on the lookout for deliberate harm, & we’ve certainly seen a lot of consumer & worker organizing around harmful practices over the last five years. Twitter is a great place to watch that evolve.

MD: How do you realize financial & cultural gains from ethical technology use?

CM: Most businesses are really starting from the point of viewing ethics as a risk management tool: they usually see it as a way of preventing bad things from happening. But actually ethics has so much more potential than that.

Ethics isn’t just about what NOT to do, & what harms to avoid: it’s also about identifying what harms are already happening which could be changed through innovation.

We’ve seen a lot of news about bias in hiring, in health, & other areas, & we absolutely shouldn’t use these tools if they’re replicating or even exacerbating existing societal harms.

But the great thing is that these tools are also opening up the conversation about where these harms are happening & what it would take to fix them—not just in technology but in society itself.

A really critical benefit of taking a firm ethical stance on building technology is in talent acquisition & retention: a 2019 study by the think tank Doteveryone showed that technologists in general, & data scientists in particular, are adamant about working for responsible employers.

Businesses are also underestimating the power of a loyal consumer base that wants to buy more ethical products. Yes, there are plenty of counterexamples, but we’ve also seen consumers seek out better or fairer products, though “better” and “fairer” can mean a lot of different things.

That difference is why we might see very different strategies for pressuring businesses to behave differently: what aspects of ethics are you focusing on? How are you prioritizing? This is why different products or companies get such different reactions from various groups seeking a more ethical future.

MD: Ethics sounds great in theory, but it’s challenging to put into practice because of conflicts in values & incentive systems. How do you balance conflicting ethical concerns?

CM: The best advice I have about this is that when things go wrong (& they almost certainly will), you want to be able to demonstrate that you made the best choice you could with the information you had.

This typically means starting from a clear set of guiding principles, usually developed in consultation with a range of stakeholders and experts: customers, lawyers, engineers, people who might in any way be impacted by or partake in making a piece of technology.

You also don’t need to start from scratch on this: you can look to industry guidance for existing frameworks and guidelines, like the IEEE Ethically Aligned Design manual or the EU’s Ethics Guidelines for Trustworthy AI, among others. In fact, there are tons of codes out there.

The important thing is turning those lovely vision statements into practical action. Once you’ve devised or chosen the principles you’re going to use, you need a governance mechanism for making and capturing decisions. Then, when really sticky situations come up (the kind where there are no clear winners and it’s a matter of making trade-offs to find the least worst option), you can clearly draw on your principles to reach a decision.

Ideally there is an accountability mechanism so a decision like this can be audited and appropriate measures taken if stakeholders who are impacted by the decision disagree with the course of action that was taken.

The keyword here is accountability: be clear about who needs to be held accountable, and to whom they need to be held accountable.

MD: The AI space is constantly evolving. What are some effective ways of keeping up with emerging practices?

CM: I personally find this terrifying! The rate of change is overwhelming & there’s no feasible way to keep up with all the advancements in the field. I think the first step is narrowing your focus so you can prioritize your information sources.

What do you need to know in depth & where can you be content with a three-sentence overview? That helps prioritize which newsletters to subscribe to and events to attend.

The ethics community in AI is particularly passionate and motivated, so fortunately they’re very willing to engage with like-minded people. I often find my inbox and Twitter feed inundated by “have you seen this???” messages about the latest AI scares—& occasionally by more hopeful articles too!

But a great thing about being part of such an active community is the feeling that there are people out there very actively working towards making things better for everyone.

Many thanks to Dr. Caitlin McDonald for her time and terrific insights.

Join us again for our next monthly AI Ethics Twitter Chat on February 28th, when Dr. Brandie Nonnecke will discuss the ethics of deepfakes.