For our April 2020 AI Ethics Twitter Chat, we invited Sasha Costanza-Chock to discuss their powerful and very timely book, “Design Justice: Community-Led Practices to Build the Worlds We Need.”

Buy the book => https://www.kobo.com/us/en/ebook/design-justice

Mia Dand: Hello Sasha and welcome! What inspired you to write this book?

Sasha Costanza-Chock: Thanks for having me, Mia! So, Design Justice is a term that has emerged from a growing community of practice, especially the Design Justice Network (designjustice.org). This network was born at the @alliedmediaconf in 2015, and over the next few years it created a set of principles that really resonate with a lot of people. The principles are here.

I describe some of this history in detail in the Introduction to the book, which is freely available on the site. Other inspirations include my own lived experience as a trans* person, and my work as an educator.

Of course, there’s a very rich and deep history of theory and practice about how design might be more aligned with liberatory politics, community control, and ecological survival; throughout the book I’m in conversation with various bodies of work about design and community organizing.

I also get into this question further in my recent interview with @IdeasOnFire.

MD: Let us start with some critical concepts from your book. How do disaffordances and dysaffordances shaped by the matrix of domination show up in AI technologies?

SC: Great question! Design Justice urges us to consider how design affordances and disaffordances distribute both penalties and privileges to individuals based on their location within the matrix of domination. The matrix of domination is a term developed by Black feminist scholar, sociologist, and past president of the American Sociological Association Patricia Hill Collins to refer to race, class, and gender as interlocking systems of oppression.

For example, Kindle for Kids requires a binary gender selection: https://twitter.com/schock/status/1250866505962737664?s=20

Another example is COVID-19 voice detection: https://twitter.com/tamigraph/status/1245110643314683904

MD: You used the Gates challenge as an example. Can you share more about the harms of parachuting technology into a community?

SC: Tech solutionism is the ideology that constantly says ‘there’s an app for that!’ It’s deeply broken. It comes from a place of extreme privilege, erasure of people’s lived experience as domain expertise, and it is fundamentally harmful.

In the book, I talk about the Gates Reinvent the Toilet Challenge as one example. It’s re-invent-the-wheel-ism that doesn’t set parameters correctly and ignores decades (millennia!) of viable alternatives. @NextCity has published this excerpt: https://nextcity.org/daily/entry/to-truly-be-just-design-challenges-need-to-listen-to-communities

In general, in the design justice community we are inspired by a slogan from the Disability Justice movement, ‘Nothing About Us Without Us!’.

MD: What is the danger in the underlying assumption that fairness means treating all individuals the same (vs. providing redistributive action)?

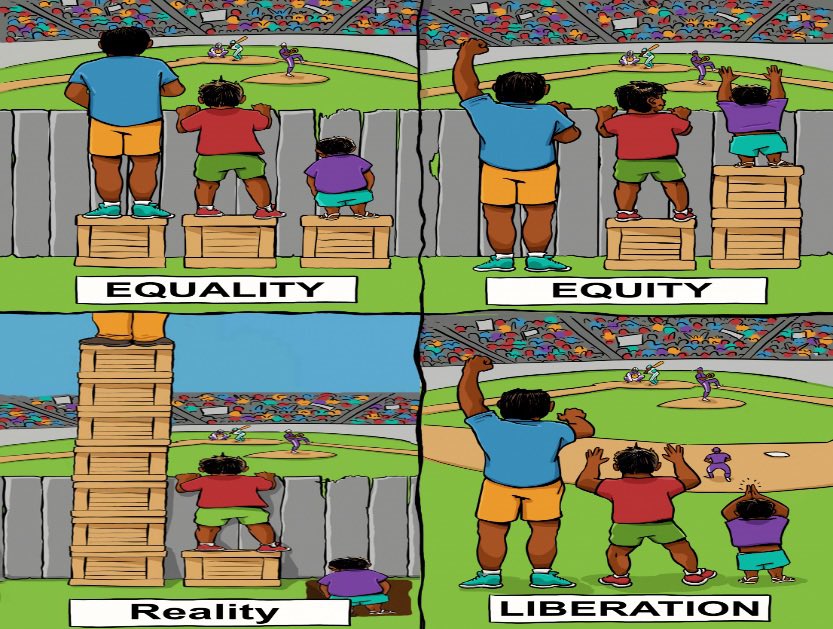

SC: Treating all individuals the same ignores the differences between individuals, as well as the social, historical, and structural forces that shape our life chances. I like this graphic and description by @MalindaSmith.

Unfortunately, a lot of the developers of technical systems, including AI, are operating according to a simplistic understanding of ‘fairness’ as ‘treating all individuals the same’ (the first image in the graphic).

In the realm of AI, this means that automated decision support systems (ADS) in domains including education, employment, healthcare, criminal justice, and more are all being developed in ways that reproduce existing inequalities. See https://pretrialrisk.com and the work of @mediajustice and @mediamobilizing.

To take a #COVID19 example, nurses, doctors, and health practitioners are organizing against proposed crisis standards of care in Massachusetts that are racist, classist, and ableist, under the guise of ‘treating everyone the same.’

I wrote a thread about this called “Algorithmic Necropolitics: Race, Disability, and Triage Decision Making”; you can find it here.

MD: How can Design Justice principles counter tech-centricity, solutionism and reproduction of white cis male tech bro culture?

SC: The DJN principles can provide helpful reminders or guideposts to counter that often unwittingly sexist, racist, classist, ableist tech bro culture. But principles alone can’t transform the ways we design and develop tech.

We definitely need people to take individual responsibility for shifting the ways we work to design and develop the technologies that will shape our futures. But individual action and abstracted principles aren’t enough.

We need organizational and institutional shifts; pedagogical shifts in how we teach and learn computer science, design, development; shifts in accelerators and in funding; political transformation in tech priorities, in assessment, in auditing mechanisms.

I have had multiple students at MIT tell me that their intro-level CS classes still contain problem sets where they must make a dating algorithm to pair male students with female students, and same-sex pairings and nonbinary genders were explicitly disallowed.

The immense mobilization of resources by nation states, the private sector, and grassroots networks to solve sociotechnical challenges around the current COVID-19 crisis provides a window into the possibilities of need-driven R&D, although it’s complicated.

In the U.S., we HAD mechanisms for public oversight of technology development in the past, like the Office of Technology Assessment, that were eliminated. There’s growing bipartisan support to bring that back.

MD: Do you think the pandemic will help or hurt the #TechWontBuildIt mobilization?

SC: On the one hand, the pandemic lays bare deep structural inequalities and broken systems, and makes it possible to imagine the radical types of transformation that we so desperately need: universal basic income, universal health care, universal internet access, and so much more. That includes the conversation about #TechWontBuildIt, the need for #PlatformCoops, the ways that @gigworkersrising and many others have highlighted the need for workers at Amazon, Instacart, and throughout the gig economy to organize, mobilize, and strike for their rights.

But on the other hand, it’s a moment where what @NaomiKlein calls the Shock Doctrine is in full effect. Crisis capitalists are already pouncing on the opportunities to make all kinds of money in a pandemic situation. Instacart shows no signs of shifting its horrendous worker practices.

Arundhati Roy says that the pandemic is a portal. We have a chance to organize together to leave as much of the baggage of the matrix of domination behind as possible, but without focus and work, we’ll reproduce it, or make it worse.

The design justice community is just one of many communities that are striving to care for each other, develop new possibilities, and dare to imagine the radical transformations in process and in products that we need to create just, survivable worlds.

Audience question via Buse Çetin @BuseCett

What does moving away from ‘algorithmic fairness’ to ‘algorithmic justice’ imply for algo auditing? (can you also tell a bit about the need to shift from ‘fairness’ to ‘justice’?) Thank you!!

SC: Here I would point to the phenomenal work of @jovialjoy and the Algorithmic Justice League (@AJLUnited). The short answer is that it is deeply contextual. There is no silver bullet. In some cases, intersectional audits can help shift AI systems to be more truly just; in others, ‘inclusion’ is a thin screen for more accurate and insidious automated oppression. For example, in the Gender Shades project (http://gendershades.org) and in the poem/audit ‘AI, Ain’t I A Woman?’, @jovialjoy demonstrated how gender classification fails hardest on darker-skinned female faces. https://youtube.com/watch?v=QxuyfW But that doesn’t mean her main focus is to advocate for more inclusion in potentially harmful systems.

Demonstrating technical bias in AI systems can be a powerful argument against deployment. See @AJLUnited’s extensive policy testimony: https://ajlunited.org/library/policy. So instead of thinking we can ‘solve’ algo audits as long as they’re intersectional, we have to look at each algo in context, consider human-in-the-loop analysis, and consider when the answer is a moratorium or ban.
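For readers who want to see what “intersectional” means mechanically in an audit like Gender Shades, here is a minimal Python sketch. It is not the Algorithmic Justice League’s methodology. The records, the `Record` fields, and the `error_rates` helper are hypothetical, and the data are invented toy values rather than results from any real audit. The sketch computes a classifier’s error rate three ways: by gender alone, by skin type alone, and by the intersection of the two.

```python
from collections import defaultdict
from typing import Callable, Dict, Hashable, List, NamedTuple


class Record(NamedTuple):
    """One hypothetical audit row (illustrative fields, not a real dataset schema)."""
    gender: str      # annotated gender label, used as ground truth here
    skin_type: str   # coarse skin-type group, e.g. "darker" / "lighter"
    predicted: str   # the gender the classifier predicted


# Invented toy data for illustration only; NOT Gender Shades results.
RECORDS: List[Record] = [
    Record("female", "darker", "male"),     # misclassified
    Record("female", "darker", "female"),
    Record("female", "lighter", "female"),
    Record("female", "lighter", "female"),
    Record("male", "darker", "male"),
    Record("male", "darker", "male"),
    Record("male", "lighter", "male"),
    Record("male", "lighter", "male"),
]


def error_rates(records: List[Record],
                key: Callable[[Record], Hashable]) -> Dict[Hashable, float]:
    """Return the fraction of misclassified records in each group defined by key."""
    totals: Dict[Hashable, int] = defaultdict(int)
    errors: Dict[Hashable, int] = defaultdict(int)
    for rec in records:
        group = key(rec)
        totals[group] += 1
        if rec.predicted != rec.gender:
            errors[group] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Single-axis views versus the intersectional view.
by_gender = error_rates(RECORDS, key=lambda r: r.gender)
by_skin = error_rates(RECORDS, key=lambda r: r.skin_type)
by_intersection = error_rates(RECORDS, key=lambda r: (r.gender, r.skin_type))

for name, rates in [("gender", by_gender),
                    ("skin type", by_skin),
                    ("intersection", by_intersection)]:
    worst = max(rates, key=rates.get)
    print(f"{name}: worst group {worst} with error rate {rates[worst]:.2f}")
```

In this toy data, each single-axis view reports a worst-case error rate of 0.25. The intersectional view shows that the errors are concentrated in one subgroup, at 0.50. As Costanza-Chock stresses, surfacing such a gap is only a starting point. A lower error rate does not by itself make a system just to deploy.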

Audience question via B Nalini @purpultat

How does design justice reconcile with the speed of tech dev? Is a balance and/or harmony possible?

SC: ‘Move Fast, Break Things’ has led us to a lot of broken things. Community-led processes to build the worlds we need may take longer, but it’s worth it. Can that really happen under capitalism though? You decide 😉

In conclusion, if you take just one thing away, I’d say be sure to ask yourself the following throughout any tech design process: Who participated? Who benefitted? Who was harmed?

You can check out free chapters of the book at design-justice.pubpub.org, order the book from @MITPress at https://mitpress.mit.edu/books/design-justice, and learn more at http://designjustice.org.

Join us again next month, on Sunday, May 31, at 11 a.m. PDT on Twitter for our May #AIEthics #TwitterBookChat, where we discuss “Artificial Unintelligence” by author Meredith Broussard (@MerBroussard).

Get the book => https://indiebound.org/book/9780262537018