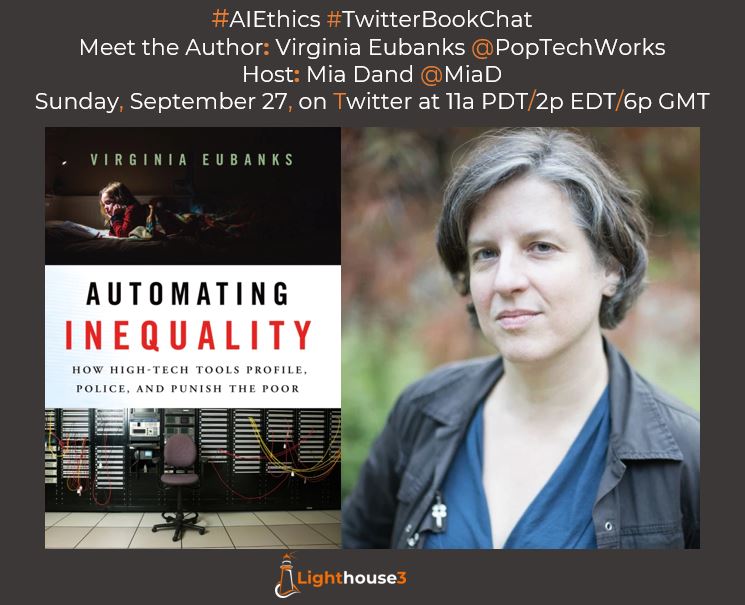

In September, we invited Virginia Eubanks @PopTechWorks, the renowned scholar and author of “Automating Inequality: How High-Tech Tools Profile, Police, and Punish the Poor,” to discuss her powerful book. #AIEthics #TwitterBookChat

Mia Shah-Dand: Thanks for joining us, Virginia! As someone who grew up poor, your book resonates with me on a very personal level. How did your personal trauma and experience with the insurance company influence your perspective (and this book)?

Virginia Eubanks: I grew up professional middle class, though my mom was a class migrant who was born working class and moved to the PMC, at a not-insignificant cost. But, like the majority of people in the US (64%), I’ve done spells on public assistance. For me, it was Universal Life Line (reduced-price access to telephone service), and more recently, I’ve been navigating the disability system with my partner, who has bipolar and PTSD. The insurance company red-flagging actually happened after I had finished most of the research and begun the manuscript. So it was less of an inspiration for the book and more several lines drawn under my central point: that the impacts of digital rationing of basic human rights like healthcare can be terrifying and life-changing. That’s a lesson I learned from 20 years as a welfare rights organizer.

MS: In your opening introduction, you say George Orwell got this wrong. “Big Brother isn’t watching you. He is watching us.” Can you please expand on this?

VE: The way Orwell imagined surveillance (i.e., Big Brother watching *you,* as an individual) keeps us from understanding that digital surveillance often operates through social sorting, grouping “likes” with “likes.” Predictive analytics, e.g., is at heart about grouping people together and guessing how they might behave, based on how people “like them” have behaved in the past. Which obviously opens the door for all sorts of bias, false assumptions, spurious correlations and all kinds of other statistical violence that lands with particular force on marginalized people: ppl of color, migrants, sexual or religious minorities, poor ppl, etc.

MS: That was helpful context re: digital surveillance. “Digital poorhouse reframed political dilemmas as mundane issues of efficiency and systems engineering.” Was this reframing for plausible deniability or intended to sell more technology?

VE: If you look at the history, what I call the digital poorhouse rose at the height of the power of the welfare rights movement, especially when social workers started siding with, and striking for the rights of, their clients. I don’t know that reframing political issues as admin issues was an intentional sales job, but these techs operate to solve a political “problem”: the extension of the welfare system to more than 50% of people who actually qualified, among them single moms and women of color.

MS: Would it be accurate to say that “reverse redlining” is a rational way to exploit those who are otherwise excluded from opportunities?

VE: Exploitation has always been part of how the US deals with poverty. What reverse redlining makes visible is how limited our frameworks around fairness and equity can be when they stop at just wanting to be “included” in existing systems, instead of transforming them. We’ve certainly rationalized ways to exploit poor and marginalized people in the last few decades. Here’s a more recent story I wrote about digital government debt collection, for example: https://www.theguardian.com/law/2019/oct/15/zombie-debt-benefits-overpayment-poverty?CMP=share_btn_tw Another example of this would be the RoboDebt scandal in Australia. See The Guardian’s great coverage of it: https://www.theguardian.com/australia-news/2020/dec/18/robodebt-victim-refunded-more-than-56000-in-erroneous-centrelink-debts and the social movt response: notmydebt.com.au/auwu

MS: I have to confess that I cried through many parts of your book. We often hear about “human in the loop” in AI discussions. How does one reconcile this with many examples you shared of case workers/humans with bigotry and unconscious racial bias?

VE: I cried researching and writing many parts of the book, Mia. And this is a complicated question. Racism and classism at the front lines of public assistance have plagued the system since it was created. But I have no faith that these new digital systems are any less racist or classist. In fact, I fear that their bias is just more deeply embedded, more structural instead of individual. And I’m also suspicious that these new algorithmic tools are displacing the decision-making of *frontline* workers, the most female, working class, and racially diverse part of the social service workforce.

MS: Here’s what I found very disturbing as a parent – models like VI-SPDAT and AFST seem arbitrary and often punitive, e.g., penalizing “parenting while poor” (vs. poor parenting). Are you worried these are being replicated in powerful AI models?

VE: There is nothing arbitrary about these tools. They are patterned – and pattern-seeking. I think of them as manifestations of social structure, which is one of the things that makes them so interesting to research and write about. And yes, I absolutely think all kinds of ridiculous and false narratives about the poor – that they are lazy, irresponsible, criminal, fraudulent, bad parents, etc. – are being replicated in a wide variety of algorithmic decision-making tools, whether they’re ML or AI or just a checklist. And those false narratives drive policy and tech that are primarily punitive and exploitative. Perhaps what is so frightening to me about these assumptions being built into digital tools is their potential scale and speed.

MS: Vilification and exploitation of the poor are truly abhorrent. What do systems engineers get wrong about solving homelessness, and what are the 2 questions you ask data scientists and engineers who ask you for advice?

VE: The single thing all the designers, policy makers, and systems engineers I spoke to for the book (I did 100+ interviews!) agreed on was that these tools are sometimes regrettable, but necessary, ways of doing “triage.” The assumption being there aren’t enough resources for everyone and we need to use these systems to make “hard decisions” more fairly. The assumption of scarcity is empirically false and dangerous as hell. In fact, we’re not doing triage with these tools – triage assumes that the crisis is temporary and there are more resources coming – what we’re doing is digital rationing. The two questions I ask engineers to ask themselves are: Does this system support the autonomy and dignity of the poor? Would this system be acceptable if it was aimed at non-poor people? If the answer to either of those questions is no, you shouldn’t build it.

MS: Many underestimate their odds of falling into poverty. You’ve said that it’s up to the professional middle class to save us from the digital poorhouse as they’re most at risk. Do you see any signs of them overcoming their “fear of falling”?

VE: People closest to problems have the most info about them and are most likely to be invested in creating lasting/transformative solutions. Poor people’s movements will be the force that changes the digital welfare state. But the PMC (professional middle class) has a role to play, too. If two-thirds of Americans will access means-tested public assistance between the ages of 20 and 64, the PMC needs to understand that WE WILL HAVE TO LIVE in the systems we build (or allow to be built) for the poor. We’ve all got skin in this game, though the stakes and consequences aren’t the same for everyone. The pandemic has made so many of the cracks in the system visible to those who think of themselves as “not the kind of person to go on welfare.” My hope is that we can use this moment to build real multiracial, cross-class coalitions to turn back the punitive and deadly changes I describe in my book.

Audience question from Wangui Mwangi @jackie_mwangi: Are we giving too much power to developers? What can law and institutions do?

VE: In the case of public assistance in the US, I think policy and developers are fairly aligned, unfortunately. You can make a great political career in the US whipping up resentment against welfare. That’s why I think the change will come from poor ppl’s movts. One of my favorites is the Poor People’s Economic Human Rights Campaign, a national coalition of poor-led orgs based in Philadelphia (poorpeoplesarmy.com).

MS: Thank you so much for joining us and sharing your wisdom, Virginia Eubanks! Here’s a link so folks can buy your book from an independent bookstore.